Regal Decision Systems, Inc. – Baltimore (Linthicum), MD 2002–2010

Program Manager, Technical Manager

Served as project manager for multiple analysis and development efforts and program manager for several logistics task orders under an umbrella support contract: $4.7M/year, 35 employees, 3 subcontractors, and a satellite office.

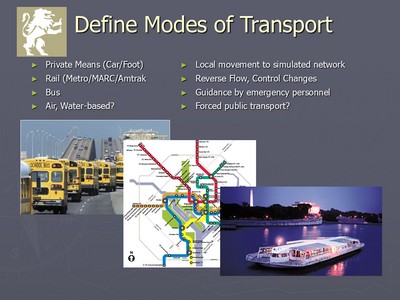

Performed management, design, analysis, data collection, presentation, and documentation functions for transportation and movement simulations in the United States, Canada, and Mexico:

- 3D pedestrian ingress/egress simulation with detailed agent and threat behaviors

- Optimized control of egress lighting for Evacuation Guidance System

- Autonomous building evacuation plug-in for Lawrence Livermore's ACATS system

- Tools to simulate land border crossings on both sides of both United States borders

- Medical Office Simulation used to analyze and streamline operations in medical practices

The individuals who interviewed and hired me were very happy that I had chosen to join them. I will always be grateful that they did, and the company was growing, but they didn't have a job to put me on right away. I spent a couple of weeks buying and configuring a new laptop and chatting with people in the office, by which time one of the owners had set me up with his dentist. The idea was that I would write a simulation tool to model operations in the practice he ran with three colleagues and numerous assistants, hygienists, and administrators, and he would sell either the product or analyses based on the product to other dentists when he retired.

The dentist explained how everything worked and I figured out what data needed to be collected and created forms to collect it all. I then took the forms to the office and got several days of data compiled thanks to the gracious efforts of the staff, particularly the administrators. All of the processes were interesting, and I got quite an education about the insurance end of the business. I then set about creating a tool that would support the creation of simulations of medical offices.

Regal was created by a group of long-time simulation engineers who had split from a parent company (with that company's blessings), taking at least one of their customers with them. They later brought in another partner to join them. The company was four or five years old when I finally got there, and their specialties were operations research and discrete-event simulation. This turned out to be a different beast than continuous simulation in many ways, but I learned it in the process of building my simulation tool in a language called SLX, short for Simulation Language with eXtensibility. This was a C-based language that implemented all of the features of discrete-event operations as integrated primitive language constructs. It implemented sets, queues, ready-made distribution functions which combined with random number generation to support Monte Carlo analyses, time advances, action interlocks of various types, and other features. It enforced pointer discipline by ensuring that an item on the heap could not be deallocated while any pointer still pointed to it. That is, all pointers had to be located and set to NULL before that memory could be freed. At the time I found that annoying, but subsequent languages have gone even further by doing away with explicit pointers altogether. It was not strictly object-oriented in its earliest incarnation, but it had object-like features and its creator may have extended the language since then. I know it was being discussed.
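For readers who have not worked with discrete-event simulation, the core ideas named above (a queue, a distribution function fed by a random number generator, and a clock that jumps from event to event) can be sketched in a few lines. This is Python, not SLX, and the single-server queue, the `simulate` function, and its parameters are illustrative assumptions rather than anything from the Regal tools.

```python
import heapq
import random
from collections import deque

def simulate(n_customers, mean_interarrival, mean_service, seed=1):
    """Single-server FIFO queue driven by a time-ordered event list.

    Returns the time each customer spent waiting before service began.
    """
    rng = random.Random(seed)
    events = []   # min-heap of (time, sequence number, kind)
    seq = 0
    t = 0.0
    # Schedule every arrival up front using exponential gaps.
    for _ in range(n_customers):
        t += rng.expovariate(1.0 / mean_interarrival)
        heapq.heappush(events, (t, seq, "arrive"))
        seq += 1

    waiting = deque()   # arrival times of customers in line
    busy = False
    waits = []
    while events:
        # Time advance: the clock jumps straight to the next event.
        clock, _, kind = heapq.heappop(events)
        if kind == "arrive":
            waiting.append(clock)
        else:  # "depart"
            busy = False
        if not busy and waiting:
            arrived = waiting.popleft()
            waits.append(clock - arrived)
            busy = True
            done = clock + rng.expovariate(1.0 / mean_service)
            heapq.heappush(events, (done, seq, "depart"))
            seq += 1
    return waits

waits = simulate(n_customers=200, mean_interarrival=5.0, mean_service=3.0)
```

A language like SLX provides these pieces as built-in constructs; here they are assembled by hand from the standard library.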

A companion product was called Proof, and it provided tools to produce extremely fast, controllable animations based on commands embedded in even extremely large text files (I've seen and generated animation files up to a gigabyte in size). Proof could incorporate a background file on top of which everything would move. This could be some sort of CAD file (Proof even provided built-in CAD-like tools that supported editing of such files) or a separate file of Proof commands that created the desired background. The idea was that your SLX simulation wrote out a Proof file, then you walked through the animation to see if you were getting the results you wanted. SLX was a major update and rethinking of an older product called GPSS/H, or General Purpose Simulation System / High-Performance, versions of which had been around since the 1960s and which originally ran on mainframes. Most of the initial owners of the company had worked with GPSS/H and its predecessors for years.

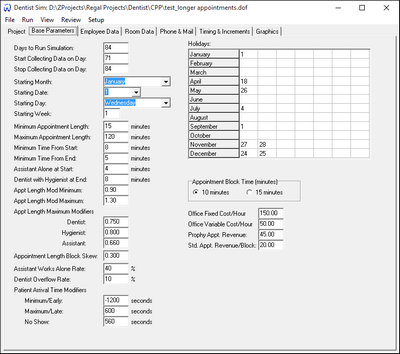

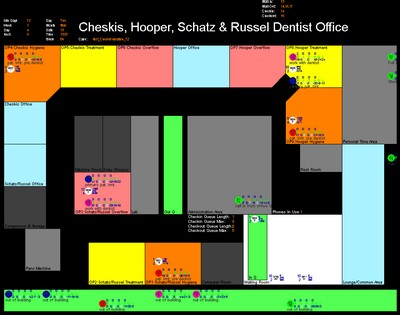

I created a framework in Borland C++ Builder 4.0 that allowed the user to enter all of the parameters that governed the simulation, including methods to enter the location of all rooms and walls and location coordinates where various personnel would perform their job functions. There were rules about who was allowed to do what with whom, where people needed to go, how long things would take, how dentists themselves would visit and revisit patients in priority order, how ancillary and administrative functions were performed, and how insurance companies handled (or tried not to handle) different kinds of submissions and questions. This information was used to initialize a detailed SLX run that simulated 84 days of operations, compiling statistics and generating Proof animation outputs as it went. The first 70 days were considered a warm-up period, where results were not compiled. This was necessary to build up queues of communications with insurance companies. A fresh set of patients arrived every day, but the administrative personnel spent a lot of their time addressing the communication backlog, and that information is not available in the beginning of a simulation run. The user could then use the wrapper program to spawn the animation viewer and also view the compiled results of each run and compare them to previous outputs. I got the thing done and reviewed to the point where the only thing left to do was get a different cover picture for the splash screen and the narrated and animated sales presentation I had put together in PowerPoint. The problem was that nobody could figure out what the actual business model should have been or how much to sell it for, so it was dropped.
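The warm-up idea can be shown with a short sketch. The 84-day run and 70-day warm-up come from the description above; the `steady_state_mean` helper and the made-up backlog series are assumptions for illustration only, not the tool's actual statistics code.

```python
WARMUP_DAYS = 70   # from the text: results from these days are discarded
TOTAL_DAYS = 84    # from the text: total simulated days per run

def steady_state_mean(daily_values, warmup_days=WARMUP_DAYS):
    """Average a daily statistic over the days after the warm-up period."""
    kept = daily_values[warmup_days:]
    if not kept:
        raise ValueError("run is shorter than the warm-up period")
    return sum(kept) / len(kept)

# Hypothetical insurance backlog: starts empty and levels off at 50 items.
backlog = [min(day, 50) for day in range(TOTAL_DAYS)]

naive_mean = sum(backlog) / len(backlog)   # biased low by the empty start
reported_mean = steady_state_mean(backlog) # uses only the last 14 days
```

Averaging over the whole run would understate the backlog, because the early days begin with empty queues that a real office never has.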

A dozen years later I still remember this tool and the ways it could have been improved, especially considering everything I've learned in the interim. My m.o. is usually to remember the mistakes and things that could have been better, because those are motivation to improve for the next time. I remember the majority of things that went right but what do you learn from that? You tried to do something, it worked, on to the next thing. Years later I made friends with a different dentist who spends a great deal of effort seeking the advice of consultants and industry gurus on how to improve his practice. The insights he provided were extremely enlightening, and indicate that some major strides have been made, to the point where the operations research problem for modern dentistry has essentially been solved, at least for the current state of the technology and under the current regulations. I went to his office one day and reviewed his operations, where he can single-handedly run as many as nine chairs (which both is and isn't quite what it sounds like). None of the dentists in the original practice ran more than three chairs. The dentists I had in my youth ran no more than two although one I had in the 90s appeared to run four or five. Once the insight is explained it becomes an absolute V-8 moment. You whack your head and say, "Why didn't I see that?" It is also pretty clear, having completed Lean Six Sigma training, that the guru who came up with the main optimizations was employing classic lean thinking when he did so. It would be interesting to know if he achieved this breakthrough by pure analysis or whether he used a simulation of some kind.

The simulation parameters were defined in the wrapper program, which spawned the simulation and animation programs and allowed the user to view and compare results.

The animation allowed the user to ensure that the actors in the program were behaving as desired. This was an important part of validating each configuration.

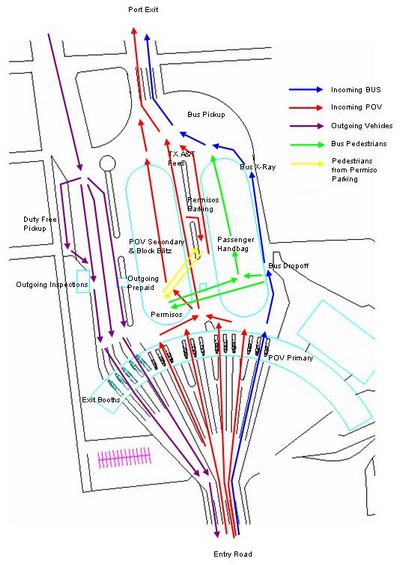

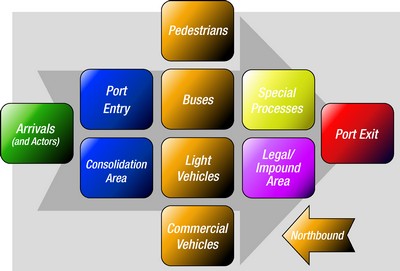

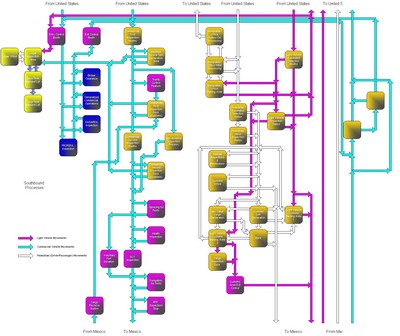

The other simulations developed by the company were in two families. Path-based simulations modeled the movement of entities on defined paths through some sort of inspection facility. Entities would enter the facility and undergo processes at different locations for different amounts of time. The time, location, and order of processes might vary based on the type of entity, the time of day or day of week, the status of special processes, and the randomly-assigned results of certain inspections. The geometry of these facilities was important because the scale of the facilities and the paths through them affected the time it took to move from place to place. It was less important to capture these times exactly than it was to capture them logically. The path networks described for motor vehicles were reasonably accurate, but the need for accuracy for some parts of the pedestrian inspection process wasn't that high. The path networks could be laid out within buildings in a way that supported the logical movements even if the layouts did not match reality. This was acceptable because the processing and queuing times were much greater than the movement time. It is also true that pedestrians tend to wander around and do things like smoke cigarettes and visit restrooms, so that behavior wasn't going to be captured anyway. This type of simulation was great for land border crossings and certain manufacturing facilities. The company won numerous contracts to examine most of the land border crossings on both the northern and southern borders of the United States. It also won contracts to build similar tools for the border crossings on the Canadian and Mexican sides of those borders, as well as Mexico's southern borders with Guatemala and Belize.

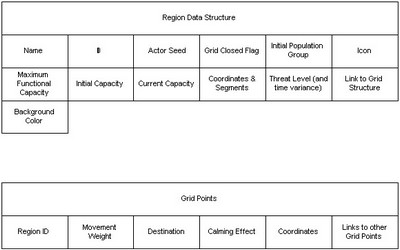

Grid-based simulations modeled the movement of entities from place to place as well, but in a way that considered how their physical movements were affected by their surroundings and the presence and movement of other entities. This technique was applied exclusively to the movement of pedestrians. Sometimes they were modeled as they moved through check-in and inspection facilities, but other times the goal was to simply model an evacuation, where the destination was some point or boundary outside of the building or region of interest.

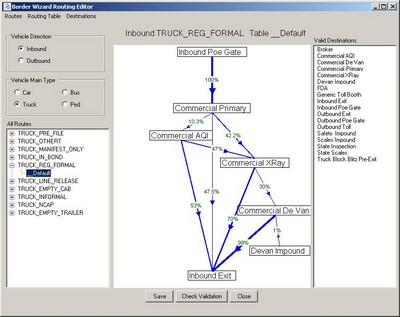

The most important path-based programs were created to model the border crossings, as described above. The first version of BorderWizard, meant to model U.S. border facilities, was undergoing one of its first expansions when I joined the company. The initial version was pretty abstract and assumed a configurable number of each type of processing station in a fixed geometry. Experience, reading, and Google Earth show that all but the simplest ports are unique. They have very different configurations to process widely varying types and volumes of traffic. One crossing is just a camera and a telephone on a stick by the side of the road, where the border-crosser is supposed to call a CBP (Customs and Border Protection) officer working at a different port nearby to verify the crossing. Another facility is a three-car ferry that crosses a narrow part of the Rio Grande. Others are bridges that carry vast numbers of commercial vehicles or an even larger number of only privately owned vehicles. One crossing is a tunnel. Some facilities operate around the clock and some for only a few hours a day or even a week. Some may be by appointment only. A few service rail traffic. The point is that it quickly became apparent that a flexible, modular tool should be created to build the models.

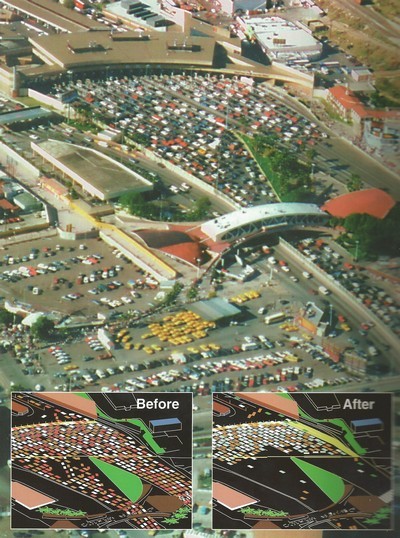

After some pilot work the tool was improved so it worked like a CAD program that allowed analysts to lay out paths and facilities. The characteristics of the facilities would be entered in other parts of the user interface, which could then be used to perform simulation runs. These generated output animations and reports with graphs and tables of results. The early versions also began to incorporate automatic verification of paths and connections. It would be way too easy to create a segment of path going the wrong way. An entity traveling on a directional path would find itself stuck and that would gum up the entire simulation. This would be very difficult to find by visual inspection, so the automated verification was a real boon. The system also had to ensure that traveling entities could get to required logical destinations. The initial discovery process seemed to indicate that certain operations were done the same way at every port, or at least at the 50 or so largest ones that were the subject of CBP's initial interest. It soon became clear that there were so many exceptions that a more general solution was needed, especially when the system was first applied to smaller ports. My personal feeling is that I would almost always aim for the most general, modular, and flexible solution from the outset, but I also appreciate that it's good to get something running so you can start learning from it. The company did end up building much more flexible and modular systems for the Mexico and Canada programs.
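The kind of automated path check described above can be sketched as a reachability test on a directed graph. This is a minimal sketch under assumptions: the node names, the `(from, to)` segment format, and the `find_stuck_nodes` function are hypothetical and are not taken from BorderWizard.

```python
from collections import deque

def reachable(adjacency, starts):
    """Return every node reachable from the start nodes (breadth-first)."""
    seen = set(starts)
    queue = deque(starts)
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def find_stuck_nodes(segments, entries, exits):
    """List nodes an entity can reach from an entry but cannot leave via an exit."""
    forward, backward = {}, {}
    for a, b in segments:
        forward.setdefault(a, []).append(b)
        backward.setdefault(b, []).append(a)
    can_be_reached = reachable(forward, entries)
    can_reach_exit = reachable(backward, exits)   # search the reversed graph
    return sorted(can_be_reached - can_reach_exit)

good = [("entry", "booth"), ("booth", "exit"),
        ("booth", "secondary"), ("secondary", "exit")]
# Same layout, but the last segment was drawn in the wrong direction.
bad = [("entry", "booth"), ("booth", "exit"),
       ("booth", "secondary"), ("exit", "secondary")]

stuck_in_good = find_stuck_nodes(good, ["entry"], ["exit"])
stuck_in_bad = find_stuck_nodes(bad, ["entry"], ["exit"])
```

In the second layout an entity sent to `secondary` has no way out, which is exactly the sort of error that is hard to spot by eye on a large drawing.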

The BorderWizard software, used to model U.S. land border crossings, was already designed when I started at Regal, but I was the main discovery analyst for the Canada and Mexico programs. Their processes turned out to be very different than those in the U.S.

Discovery and data collection trips involved getting drawings and publicly available information before arriving, getting a tour of the facility, asking boatloads of questions about local operations, and setting up cameras to record two or three days of video of all operations. Hourly and daily counts of the different types of travelers processed were provided by the port after the visit, and process times and distributions were determined by reviewing the collected video. This also included diversion percentages of each traveler type from each process (e.g., how often does each type of traveler go to different destinations when leaving each process?). Schlepping video equipment around the country was always a hassle, but it was just part of the job.
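Diversion percentages of the kind described above reduce to counting where each traveler type goes after each process. The sketch below assumes observations have already been logged from video as `(traveler_type, process, destination)` tuples; that format, the sample values, and the `diversion_percentages` function are illustrative assumptions.

```python
from collections import Counter, defaultdict

def diversion_percentages(observations):
    """Map (traveler_type, process) to {destination: percent of departures}."""
    counts = defaultdict(Counter)
    for traveler_type, process, destination in observations:
        counts[(traveler_type, process)][destination] += 1
    result = {}
    for key, destinations in counts.items():
        total = sum(destinations.values())
        result[key] = {d: 100.0 * n / total for d, n in destinations.items()}
    return result

# Hypothetical tallies from a reviewed stretch of video.
observed = [
    ("car", "primary", "exit"),
    ("car", "primary", "exit"),
    ("car", "primary", "exit"),
    ("car", "primary", "secondary"),
    ("truck", "primary", "secondary"),
    ("truck", "primary", "exit"),
]
percentages = diversion_percentages(observed)
```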

I spent many hours watching the action from rooftops at the Peace Bridge crossing. The picture on the left is of the Proof animation output while the one on the right shows a 3D rendering created for a different analysis project.

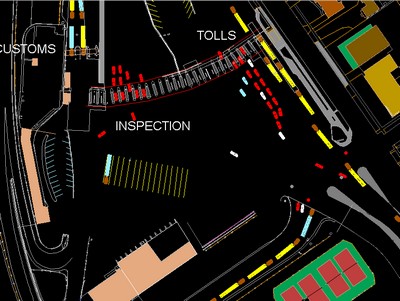

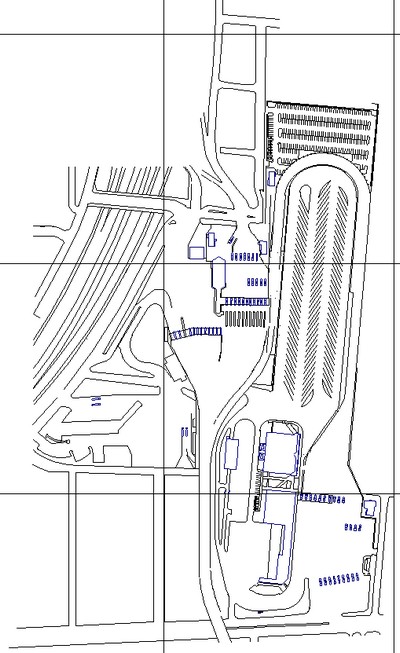

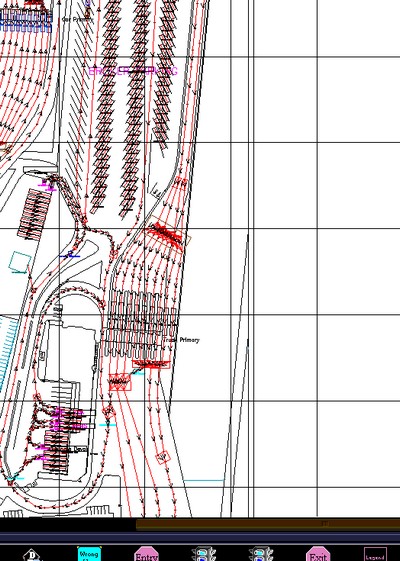

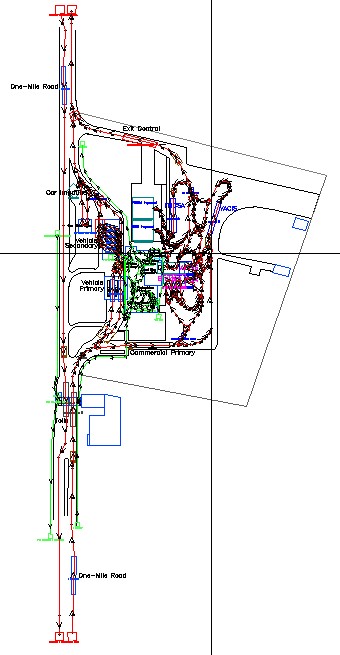

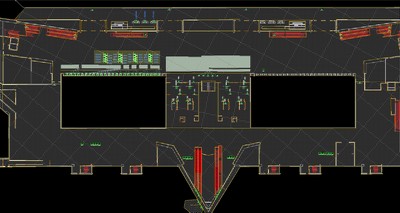

I spent a great deal of time at Ambassador Bridge in Detroit, the busiest commercial crossing on either border. It was one of the first visits I made and I did several analyses for that facility. I spent a lot of time on the rooftop of the port office there, including one memorable evening in a major fall of snow and slush. Clockwise from upper left: a CAD underlay that provides the initial background and scaling for the animation, the paths laid out on the underlay in the layout editor, and the Proof animation output.

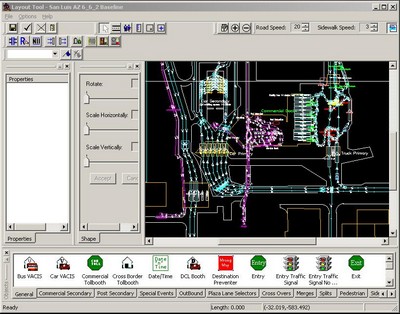

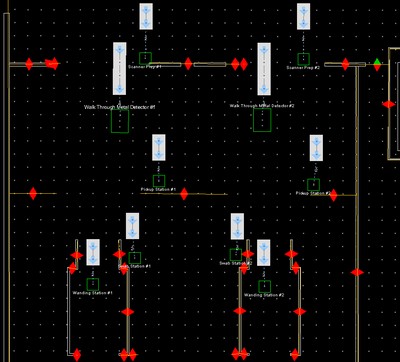

More detailed views of the routing networks defined by the layout editor, and a view of the layout editor itself. I recreated parts of the layout editor during some downtime between projects, which was one of the more enjoyable programming efforts I worked on. I was mostly an analyst and project manager during those years, so getting to write code that did something interesting was a nice break.

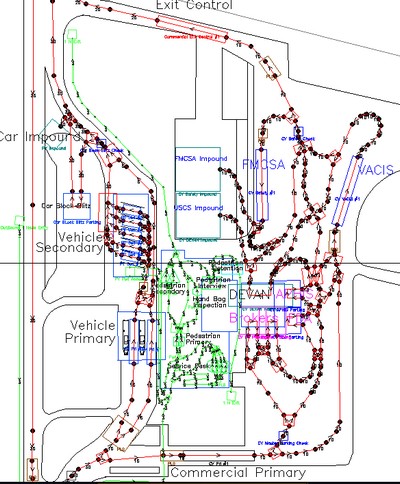

I created the major design documents for the Canadian and Mexican programs. Over the course of several visits to Ottawa and Mexico City we met with numerous government agencies to learn what their concerns were and what interest they would have in the analyses the model could carry out. We toured numerous facilities to learn how things worked. As noted above, their processes are sometimes quite different than those in place at U.S. crossings. Mexican facilities were especially complex, with over eighty different processes identified. About half of those are shown in one of the diagrams from the model requirements document I wrote, on the right.

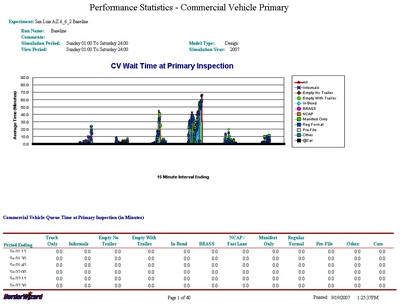

The model generated outputs which reported queue sizes and wait times at all facilities over the week of simulated time, by traveler type, in both graphical and tabular form. The ultimate means of verifying the accuracy of the created models was to compare the queues generated in the model to those observed in real life, both during data collection visits and in the collective experience of port personnel. The most complex models took nearly an hour to run, for which reason we only ran a single iteration. Some experimentation showed that running multiple iterations with different random number seeds usually had little effect on the results.
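One way to check how much the random number seed matters is to run the same model under several seeds and look at the spread of a key output. The sketch below uses a toy single-queue model as a stand-in; `toy_model`, its rates, and the `replicate` helper are assumptions for illustration and say nothing about the actual border models.

```python
import random
import statistics

def toy_model(seed, n=2000):
    """Average wait in a single-server queue, via the Lindley recursion."""
    rng = random.Random(seed)
    wait, total = 0.0, 0.0
    for _ in range(n):
        total += wait
        service = rng.expovariate(1.0 / 3.0)   # mean service time of 3
        gap = rng.expovariate(1.0 / 5.0)       # mean time between arrivals of 5
        wait = max(0.0, wait + service - gap)
    return total / n

def replicate(model, seeds):
    """Run the model once per seed; return the mean and sample std deviation."""
    results = [model(s) for s in seeds]
    return statistics.mean(results), statistics.stdev(results)

mean_wait, spread = replicate(toy_model, range(1, 11))
```

If the spread is small relative to the mean, a single long run is a reasonable economy; if not, the extra replications are worth their run time.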

As clever as the tools were, the goal was always to improve operations wherever possible. There are two basic ways to do that. One is to improve the individual processes within the facility, for example by initiating the use of electronic pre-clearance passes to greatly reduce one class of processing time. The overall effect on the system can then be assessed. The other way is to rearrange the existing processes in some way to create more tightly coupled flows and eliminate constrictions. These sorts of systemic rearrangements are characteristic of Lean analysis. I and other analysts worked with different departments within CBP, the real estate portfolio managers, and the individual port operators (private companies own some of the port facilities; government employees always perform the inspections) to perform many different analyses. The most important ones were in support of major architectural changes. I participated in one analysis that helped determine which proposed layout should be used for a new port in Maine, which has now been in operation for several years. The effects on the operations of nearby ports are sometimes analyzed as well.

Several programs were based on grid simulations. Tools were developed to analyze operations in airports, both for security inspections and for process modifications proposed by airlines. I didn't work on many airport projects but I did do discovery and data collection trips for a logical simulation. That program determined the number of line and management personnel required to support inspection operations based on the flights and passenger loads scheduled for each airline and terminal. Each day's schedule data was retrieved from the Sabre system and used by the model to determine the number of inspections that had to be performed and when. Those results were used to build schedules that provided coverage while assigning standard full-time, part-time, and partial-shift schedules. The company ultimately lost the Sabre/Staffing contract when the customer decided it needed a bigger shop with its own server management experience, something a smaller analysis shop couldn't really provide.

The airport grid models were mostly used to assess the placement of infrastructure. Other airport models were used to support staffing analyses.

I gave a presentation of the company's airport modeling capabilities at the 4th Annual Aviation Security Technology Symposium in late 2006. A lot of the information presented was fascinating but there was also evidence of a huge amount of duplication and waste. There were at least eight different groups doing analyses on TSA checkpoint operations, for example, and those were just groups participating in the conference. I shudder to think how many more might have been out there. It seemed like the Department of Homeland Security was throwing money at everyone and everything around that time.

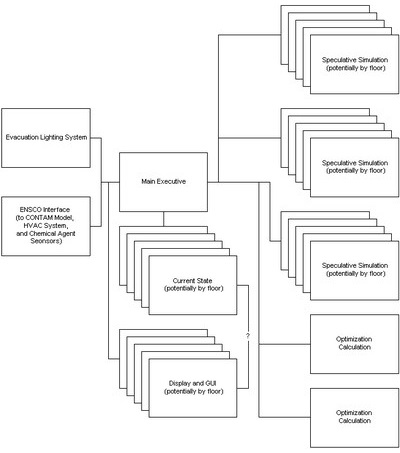

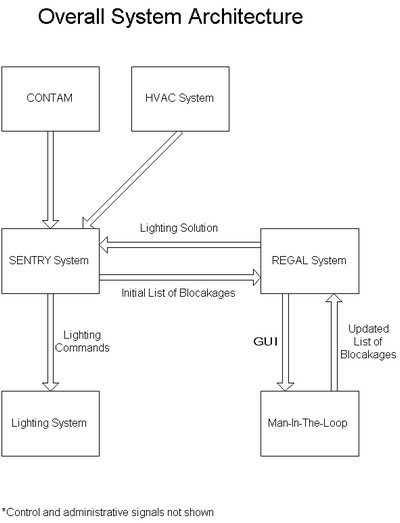

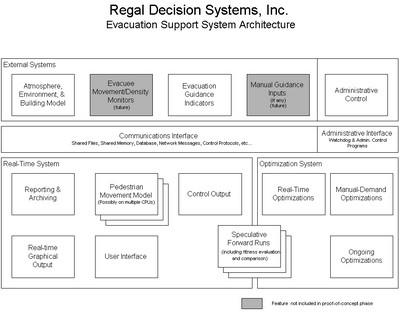

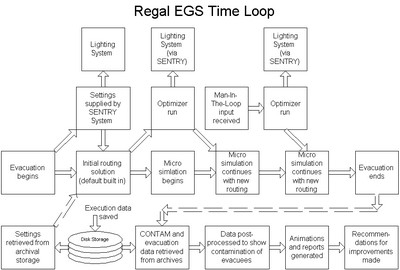

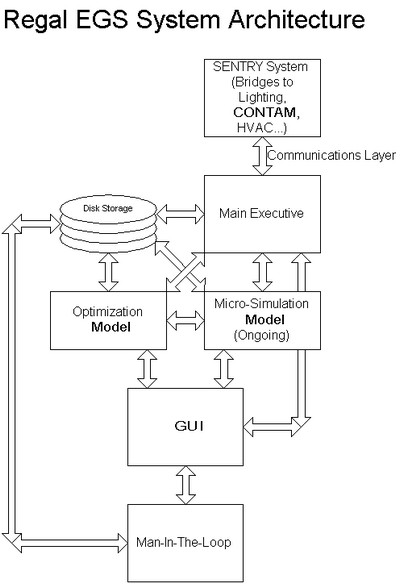

Once the grid techniques had been proven in airports we got a job as part of a large program to develop an evacuation control system that optimized the flow of occupants leaving a building while considering the location and movement of airborne threats.

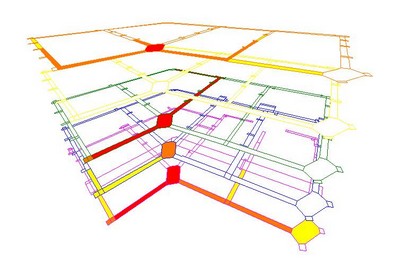

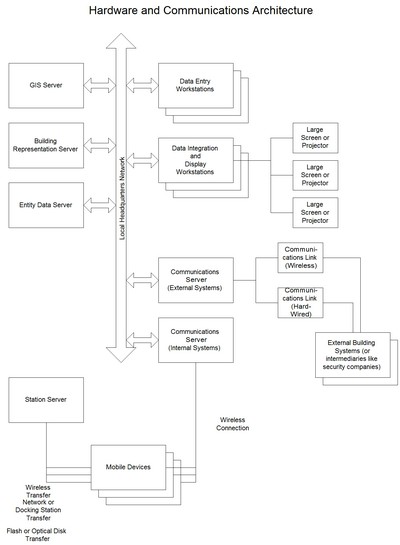

The interface to our part of the system was displayed on two monitors. Part of one display showed the evacuation solution and allowed a user to modify the direction of movement in any link (section of hallway, atrium, or vestibule) or the signals for which doors and stairwells should be used in response to newly reported information.

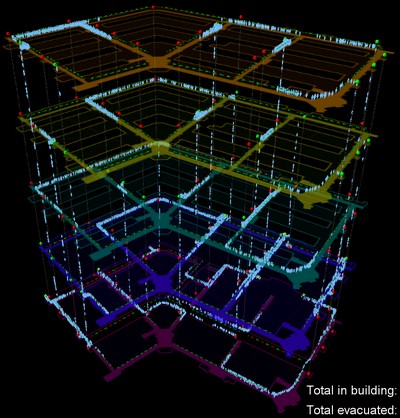

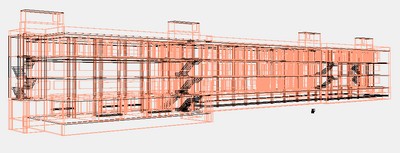

I worked on the part of the system that received the projected threat data and passed it to the optimization module so it could generate an evacuation solution that would minimize egress time and cumulative exposure to the threat. This was one of the other rare times I wrote code for Regal. Once I was sure I had it right the development team took it over and incorporated it into their system. These animations weren't a formal part of the project; I whipped them up to provide a visual means of ensuring that the threat projection made sense and that the communications worked. It was all written in Borland C++ and did not employ any third-party libraries. I included my own perspective and transform techniques to support display and rotation in 3D. Hidden surfaces were eliminated by using the Painter's algorithm; the floors were drawn from the bottom up.
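The display techniques mentioned above (rotation, perspective projection, and far-to-near drawing) can be sketched briefly. This is Python rather than Borland C++, and the function names, the axis convention (z increasing away from the viewer), and the mean-depth sort are assumptions; the original simply drew floors from the bottom up, which is a special case of the same idea.

```python
import math

def rotate_y(point, angle):
    """Rotate a 3D point about the vertical (y) axis by angle in radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)

def project(point, viewer_distance):
    """Perspective-project a 3D point onto the screen plane at z = 0."""
    x, y, z = point
    scale = viewer_distance / (viewer_distance + z)
    return (x * scale, y * scale)

def painters_order(polygons):
    """Sort polygons far-to-near by mean depth so nearer ones are drawn last."""
    return sorted(polygons,
                  key=lambda poly: sum(p[2] for p in poly) / len(poly),
                  reverse=True)

near = [(0, 0, 1), (1, 0, 1), (0, 1, 1)]
far = [(0, 0, 5), (1, 0, 5), (0, 1, 5)]
draw_order = painters_order([near, far])
screen_point = project((2.0, 2.0, 10.0), viewer_distance=10.0)
turned = rotate_y((1.0, 0.0, 0.0), math.pi / 2)
```

Drawing in this order lets nearer surfaces simply paint over farther ones, with no per-pixel depth test.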

I also worked on the part of the system that transferred the egress solution to the lighting system. This was a sample installation that would chase in the direction the building occupants were supposed to follow. Green or red lights above doors would indicate exits or stairs that should or should not be used.

I was co-project manager for this effort and spent a lot of time gathering information, particularly on site, learning how our partner companies' systems worked, negotiating communication formats, and working out and documenting architectures in Visio. I was our company's jobsite representative for the majority of working sessions and tests.

I was also project manager for the evacuation plug-in we built for the ACATS system for Lawrence Livermore National Laboratory. The system would initialize the plug-in via TCP/IP messages that sent the location of a building description file, the initial locations and characteristics of all of the building's occupants, and the destinations toward which they were supposed to evacuate. On initialization the plug-in read the building file, which was formatted as an IFC (Industry Foundation Classes, a BIM format) model, and automatically generated movement grids on all floors and stairs. Once initialized, the evacuation was started and controlled using HLA (High Level Architecture) protocols.
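A movement grid of the kind described above can be illustrated with a small sketch: a floor is divided into cells, each walkable cell is labeled with its step count to the nearest exit, and an occupant moves to whichever neighbor has the lowest count. The text-based floor plan, the `distance_field` and `next_step` functions, and the four-neighbor movement rule are all assumptions for illustration; the real plug-in built its grids from IFC geometry and was written on SLX.

```python
from collections import deque

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def distance_field(grid, exits):
    """Breadth-first step counts from every walkable cell to the nearest exit.

    grid is a list of equal-length strings: '#' is a wall, '.' is walkable.
    Cells that cannot reach an exit (including walls) are left as None.
    """
    rows, cols = len(grid), len(grid[0])
    dist = [[None] * cols for _ in range(rows)]
    queue = deque()
    for r, c in exits:
        dist[r][c] = 0
        queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and dist[nr][nc] is None):
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist

def next_step(dist, cell):
    """Return the neighboring cell closest to an exit, or None if boxed in."""
    r, c = cell
    options = [(r + dr, c + dc) for dr, dc in NEIGHBORS
               if 0 <= r + dr < len(dist) and 0 <= c + dc < len(dist[0])
               and dist[r + dr][c + dc] is not None]
    return min(options, key=lambda rc: dist[rc[0]][rc[1]], default=None)

floor = [".....",
         ".###.",
         "....."]
field = distance_field(floor, exits=[(0, 4)])
```

Avoiding other moving entities, as the plug-in did, would add an occupancy check on top of this static field.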

ACATS itself was an HLA simulation that modeled various types of engagements and movements. The idea was that numerous users would interact in a simulated world by controlling actors and vehicles in real time. If some kinds of interactions led to the need to evacuate a building or area, then this tool would automatically manage the movements of a large number of actors without requiring user input. If user-controlled combatants or first responders entered an area where the automated actors were moving, the automatons would be aware enough to move around the user-controlled actors on the way to their specified destinations.

That project was a bit of a struggle because we were operating on opposite coasts and never got enough time to interact with the customer when their machine and software setup was actually working. An even bigger problem was that our grid simulations were all built on SLX, which required hardware license keys to work. This was expensive and also failed to meet the contract requirement that the software had to be usable in quantity and without third-party licenses. (Project managers often have to try to work with decisions made at higher levels...) Another major problem was that the data files for the example buildings we were given contained no information that allowed the grid-generator function to identify stairs. There were some nice rectangular prisms stacked together in the IFC files that looked like stairs, but trying to figure out what they were in code was just plain ugly.

The plug-in to the ACATS system would receive building descriptions based on Industry Foundation Classes (IFC) models, automatically generate movement grids on floors and stairs, create building inhabitants as defined on the fly, and have them automatically evacuate to specified locations, potentially around other entities that were moving within the building. The ACATS system itself ran on Linux, but the evacuation plug-in ran on a standard Windows machine and was integrated as part of a High Level Architecture (HLA) federation. The initialization was accomplished through TCP/IP messaging.

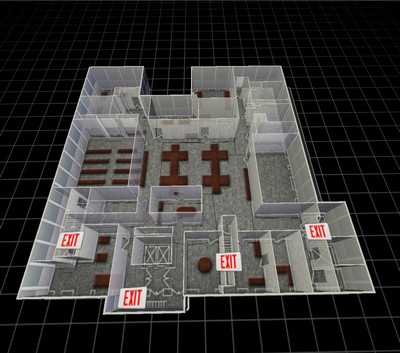

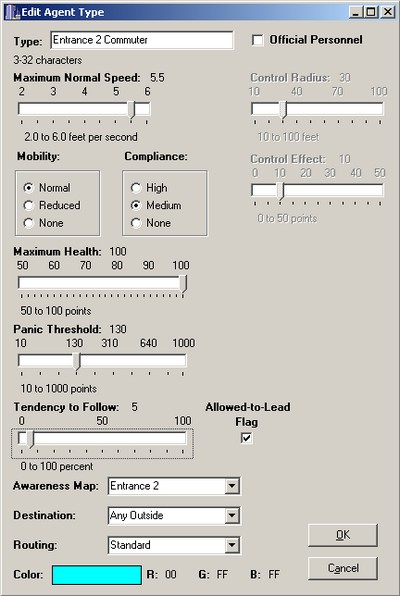

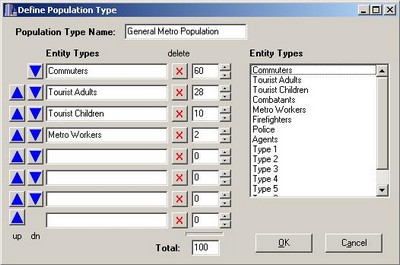

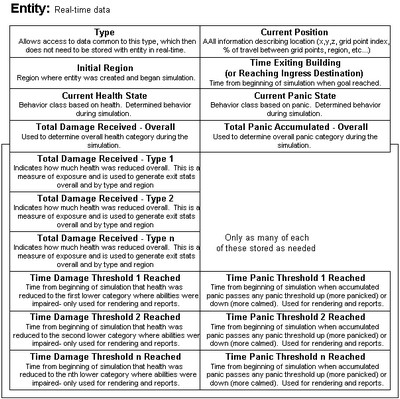

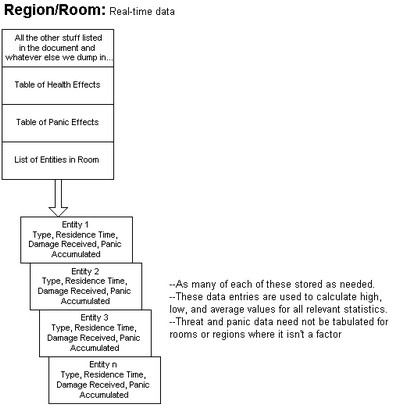

The next project we got was to build a tool that advance site reps could take to a venue and rapidly construct a grid model that would allow them to plan evacuations, entry and egress security processes, and so on. Threats could also be specified by users, but in a more static manner than in the EGS system described above. The simulated evacuees were given knowledge of specifiable parts of the building or area, would share information about routes and threats, would change their behavior based on levels of fear governed by the presence of threats and the calming guidance of official personnel and first responders, would move in family or colleague groupings, could move at different speeds and have different capacities for overcoming obstacles, and so on.

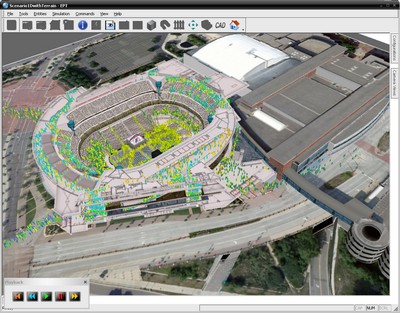

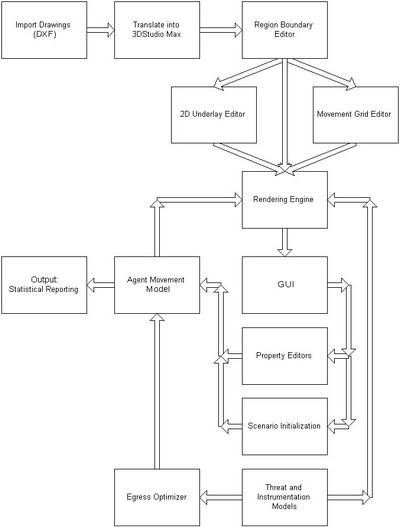

I designed all of the characteristics, behaviors, parameters, and user interfaces for the actors and regions modeled in the simulation, and also advocated that the tool be kept simple so advance personnel could develop models on the fly. What got built was a system that required complex 3D-rendered 3D Studio Max (now 3ds Max) models. That would be a nice feature to make things pretty when you had time to make a fancy demo, but it completely failed to meet the need specified in the contract. I was, however, overruled on that score as well, and I don't think they were ever able to free themselves of the SLX license for that product either. The initial customer, however, ultimately accepted it, and after I left, the company was able to continue developing the product in conjunction with a university research department. They got major contracts to develop evacuation tools for NFL stadiums and other major sports arenas.

The evacuation products worked and were very pretty, but the company never did make the tool work in the low-profile version the initial contract called for.

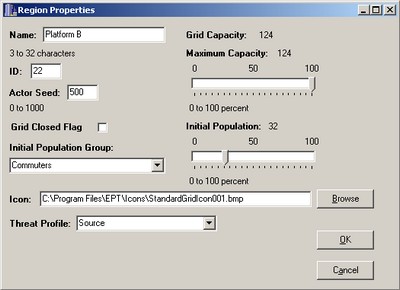

The parameter input mockup screens I designed served as part of the initial definition of how the tool would be built and which behaviors it would support.

Naturally I generated a lot more data, flow, and architecture diagrams.

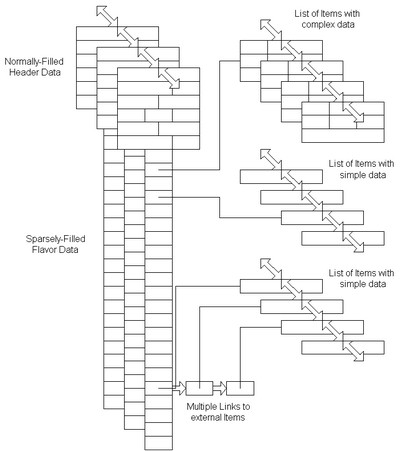

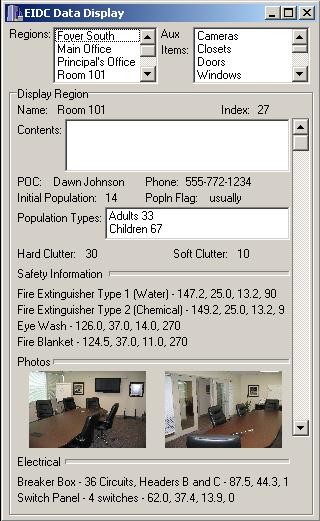

I also worked on a product meant to support first responders in connection with the National Incident Management System. It was supposed to combine mapping, navigation, communication, and coordination functions with building databases emergency personnel could use to know the layout of any building they encountered and the location of many features of potential interest inside or on the grounds. Data could include scheduled rosters of occupants by time of day, along with emergency contact information; utility and emergency hookups; location and nature of hazardous materials, and so on. The programmers came up with a shorthand building representation that defined sections of wall, with windows and doors as child entities, and also floors and ceilings. That worked fine most of the time but I quickly saw some goofy side-effects that I knew were going to lead to problems and unnecessary complexity later on. I was overruled and of course the issue I identified turned out to be a problem. I don't believe the initial effort led to any follow-up work.

Last but not least everyone in the company pitched in to develop sales proposals and marketing materials. We got exposure to goings-on in several industries that way.

We were always generating presentations and proposals. These are some of my materials.

I stayed with the company for eight years. I really do not like changing jobs and/or moving all the time, so I tried very hard not to make any changes if I didn't have to. In the beginning the company was growing and there were always new and interesting projects to work on and places to visit. I was getting recognition and raises and was having so much fun that I invited a co-worker I'd hired to work with me at Bricmont to consider working with me at Regal. He liked what he saw and moved down from Pittsburgh with his wife. Over time, however, things started to slow down. We got fewer new projects and lost some of the ongoing work we had.

One thing that happened about a year after I got to the company was that the owners decided they couldn't work together and several of them split off to form their own company. I found out about it via e-mail on the Friday morning of a week I was on vacation. The owners who left wanted to keep the company small and close-knit and the owner who stayed wanted to grow aggressively. That worked for a while but over time the management environment became increasingly difficult. People left the company under unpleasant circumstances over several years until it was less than a quarter of its maximum size. Some other people got themselves fired for drug problems, failure to show up for work, being generally disagreeable, or throwing partner companies under the bus in public status meetings. Things were... interesting. I stayed out of trouble until I inherited an effort that was doomed from the outset.

The remaining owner was (is) a very impressive guy who had supported several programs in Naval aviation, largely at Patuxent River Naval Air Station in southern Maryland. He knew a lot of the players down there and ended up working with a company that had grown too large to continue to compete as prime on contracts that had been designated as small business set-asides. Therefore our company assumed the role of prime and fronted the operation, and the friend I'd brought on was designated to be the program manager. Everyone worked together to manage the recompetes and the company won four separate task orders that were to run for five one-year periods. Three were at Pax River and one was at the Cherry Point Marine Corps Air Station depot in North Carolina. My friend read all the rules, got a local office set up in the larger company's building just outside the main gate at Pax River, and set about trying to manage the different jobs, one of which involved personnel from two additional contracting companies. The total budget was well over four million a year. Most of that was labor but a small amount was for travel and meetings.

This worked well for my friend, who was more interested in trying a management tack than continuing to grind on technical problems. The issue he encountered was that the large company we were working with refused to allow a sufficient number of its employees to become our employees, so the amount we were able to bill on most of the contracts was nowhere near the fifty percent that was supposed to be billed by the small-business prime. The government manager of one of the programs also managed to put two extra employees on one of the task orders so its budget was completely blown. As the first year came to a close one of the government task order managers wrote up a performance evaluation that gave our company terrible marks and largely ensured that we would never be able to win more contracts in that space. Indeed, we were lucky to maintain the ones we had. When my friend saw what was happening he departed the scene as quickly as he could, to take an offer with a company that another ex-Regal employee had gone to.

I had no knowledge of, experience with, or interest in any of it, but by that time there wasn't anyone left besides me who could even try to take over. My friend gave his two-week notice on a Monday, when I was on a week-long data collection visit in North Dakota. The following week we were both swamped, so the only training and explanation I got for the role was on the final Friday he was there. He picked me up at 6:00 am and told me as much as he could on the 90-minute drive down to Pax River. Once there he took me around to all of the offices where the employees of the companies worked and where the government managers sat. He also took me over to the contracts office to introduce me to people there. We got back to the Regal office at 5:00 pm and spent the next three hours filling out invoices for that month's billing submissions. I later reconstructed the first year's invoices for all four jobs and found numerous errors. Over the next two-plus years I worked up numerous support spreadsheets that helped me keep track of everything that happened, perform predictive and EVM tracking, and guard against further errors. My successors later told me they were very grateful for the records and explanations I'd left behind.

Anyway, that was it, one long day of brain dump. The guy who left is still a good friend and was even in my wedding a year or so later, but this was a rough deal. I had a few things to accomplish: keep everything afloat, learn all I could, and find a replacement. I did manage to keep the thing afloat for two and a half years, until a replacement was found who could actually handle the job and could be present enough (that individual was also replaced after I left), and I certainly learned a lot about things to do and things not to do in the future. I resisted doing things my senior management asked me to do that were not allowed by the contract. When the five-year contracts ended one by one, the company quietly abandoned the office and all the furniture it had in southern Maryland. They were probably in serious arrears to the large company they were working with, a situation that had probably been going on even during much of the time I was still there. We all laugh about it now, but that was a rough and ridiculous stretch of time. I also had to take over as Facility Security Officer, the person responsible for managing a company's security clearances and secured visits.

I learned later that the large ex-prime had also proposed that my next employer serve as prime on those Navy contracts, but that company's natural conservatism stood it in good stead. They thought the whole thing seemed fishy and chose not to get involved. That was a good call.

Near the end of my time the company also developed and tried to market a product called Fire Drill, which was a simulator that could be configured to reproduce the pump and electrical panels on a wide variety of firefighting vehicles. A great deal of money (about half a million dollars) was spent on its development, and the product did work. I always give them credit for recognizing that the build-once, sell-once mode of project work can generate a decent cash flow but the build-once, sell-many mode of product work has the possibility of generating far more leverage. That said, the resulting product has to be placed correctly. (I knew of an old Pittsburgh start-up called GUIdance Technologies that created a brilliant product called Choreographer. They tried to sell it for $7,500 a seat just about the time Borland, Microsoft, and the Whitewater Group started selling the same capability for $500 or less per seat, and sometimes a lot less.) Fire Drill might have been a success if it had been a much less expensive pure software product that could be installed on any PC, but they would only sell it pre-installed on a dedicated laptop for several thousand dollars. They sold one copy.

My time came to an end when the headcount shrank to about fifteen employees (plus the Navy contractors), down from a max of one hundred. I talked to the owners of the company that had split off from Regal early on and was all but assured of a position. I was on a golf trip in Myrtle Beach, getting ready to head out for dinner with the guys, when I saw a news crawl on TV that said the major contract I was going to support had been canceled. There went my out. I got formally laid off a couple of months later and joined the other company not too long after that, supporting the same kind of Navy programs I had been supporting with the primed task orders at Pax River. About three years later Regal had just about run out of work and was competing against two other companies for a contract that would allow them to keep the doors open. One of the competitors was the company I had gone to, the one that had split off years earlier. My new company won that contract (without which they, too, would have had to lay off several more people), and Regal's owners came in and announced that almost everyone in the company would be laid off. They kept the owners and two or three other people, and they no longer maintain a rented office as far as I can tell. They still maintain a web presence and may still be stringing some odds and ends along, but the company is effectively gone by this point.